MattWinchell

Sr Member

It's funny: you think you know something, then you realize you had it completely wrong, and your whole world is flipped again.

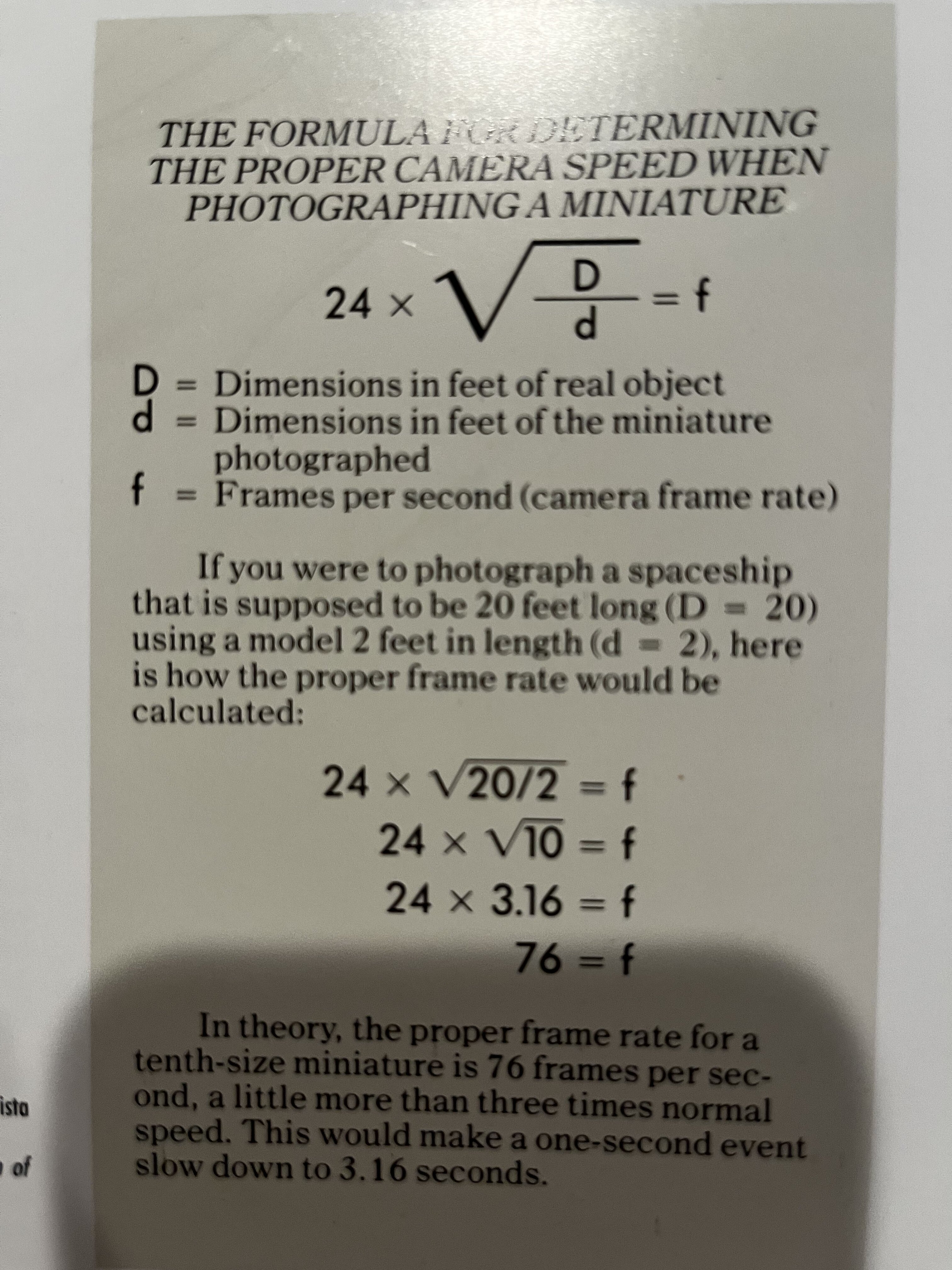

It's even funnier because I had all the answers right in front of me! I actually own a copy of the ILM: Art of Special Effects book, and it gives so much insight into the motion-control process. For one, ILM had a base formula for frame rates depending on ship size, plus hints on what to look out for when shooting.

EDIT: Disregard this formula. It has no bearing on what I'm looking for.

"In order to get the maximum f/stop the camera exposures are usually longer than one second each, so a typical shot might take half a day to shoot. With each click of the shutter, the camera advances a fraction of an inch along its sixty-foot rail."

One of the issues I ran into, as is evident in my video, is blue spill. When you put a reflective object in front of a blue screen, light from the screen often ends up on the model, so keying out the model is tough as hell. It's funny that I'm running into the same issues the ILM people did back in the day.
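
For anyone fighting the same problem digitally, here is a minimal sketch of one common despill rule: limit the blue channel so it never exceeds the larger of red and green. This is not how ILM handled it (their compositing was optical), and the function names `despill_blue` and `despill_image` are made up for illustration. It assumes 8-bit RGB tuples and that you only run it on the foreground after the key has been pulled, since it would also dull the screen itself.

```python
def despill_blue(pixel):
    """Clamp blue to max(red, green) for one (r, g, b) pixel.

    Hypothetical helper: a simple 'blue limit' despill, not a full keyer.
    """
    r, g, b = pixel
    return (r, g, min(b, max(r, g)))


def despill_image(pixels):
    """Apply the blue limit to a 2D list of (r, g, b) tuples."""
    return [[despill_blue(p) for p in row] for row in pixels]


if __name__ == "__main__":
    # A grey hull pixel contaminated with blue, and a warm pixel with no spill.
    frame = [[(100, 120, 200), (200, 180, 90)]]
    print(despill_image(frame))
```

Pixels that are not blue-dominant pass through unchanged, so the rule only touches areas where blue is stronger than both other channels. A model with genuinely blue paint would lose that color too, which is one reason this is only a starting point.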
